About Us

Great candidates want a clear purpose: what your mission is, which data problems matter most, and how their work lands in production. When you articulate this well, you attract a stronger candidate pipeline and make faster, better hires.

Tips For Impact:

- A Killer Opening Hook: The first line of your job description needs to appeal directly to the type of Data Engineer you want to hire, so make it interesting: "We're not looking for someone to just 'build pipelines.' We're creating a platform that turns messy signals into trusted decisions, and we want you to help build it."

- Give Them a Why: Tie the role directly to outcomes: "Your work will power analytics, experimentation, and Machine Learning (ML), accelerating product velocity and revenue impact."

- Make the Invite Inclusive: Tools can be learned; judgment and ownership are harder to teach, so keep your job description inclusive: "If you love turning ambiguity into reliable, observable systems (even if you don't meet every requirement), we'd love to hear from you."

Here's An Example Of What You Could Include:

[Company] helps [target audience] achieve [core mission]. We're a globally distributed team of [number] people across [number] countries. We pair product focus with rigorous engineering to deliver useful, usable, and reliable data to everyone who needs it.

Our data success rests on three pillars:

- Modern data practices that prioritize trust, accessibility, and measurable outcomes.

- A culture of experimentation where testing, learning, and iterating drive meaningful innovation.

- A global team that collaborates across time zones to design, ship, and scale solutions that matter.

The Role & Your Key Responsibilities

This section gives candidates clarity on day-to-day expectations and the impact that their role will have on the team and the company overall. The Data Engineer you hire will build systems that enable analytics, ML, and faster iteration across the business.

Here's An Example Of What You Could Include:

As a Data Engineer, you'll design, build, and operate pipelines, models, and platform components across our cloud data stack. You'll partner with Analytics, Data Science, Product, and Engineering to deliver reliable, well-documented datasets and services that actually move metrics.

This role suits someone energized by complex systems, rigorous about data quality, and comfortable owning outcomes end-to-end.

Your Key Responsibilities

- Design and evolve data models (dimensional/lakehouse) for analytics and ML.

- Build & orchestrate ETL/ELT with testing, retries, and observability.

- Stand up and optimize cloud data platforms (warehouse, lake, object storage).

- Implement streaming/CDC pipelines and event schemas for near-real-time use cases.

- Enforce data quality & governance (contracts, lineage, documentation, catalogs).

- Implement security & privacy (RBAC, encryption, PII handling) and cost controls.

- Drive performance, reliability, and SLO/SLI improvements with runbooks and post-mortems.

- Enable experimentation & ML (feature tables, model I/O interfaces) with partner teams.

About You And Your Skills

A strong "About You" helps candidates picture themselves in the role and decide whether they're a good fit. Describe the engineer who will excel at modeling, orchestration, and platform thinking, while making it clear that you value potential as much as the experience listed on their CV.

Here's An Example Of What You Could Include:

You're a systems thinker who turns messy inputs into clean, well-modeled datasets. You automate the boring stuff, write tests by default, and communicate clearly across time zones. You balance craft with speed and take pride in platforms that "just work."

Essential Skills:

- Examples of shipped pipelines/models with measurable reliability/latency gains

- Strong SQL and Python; version control and code review discipline

- Orchestration (Airflow/Prefect), transformation (dbt), major cloud data platform

- Data quality/observability mindset; clear documentation and stakeholder comms

Preferred Skills:

- Streaming/event systems (Kafka/Kinesis), CDC, near-real-time patterns

- Infra-as-code (Terraform), CI/CD for data, containers, modern monitoring

- Security/privacy (PII handling), data contracts, governance practices

- Experience enabling DS/ML teams and real-time product features

What We Offer

Benefits signal how much you value impact and long-term growth. While you're evaluating candidates, they're evaluating your data maturity and engineering culture. Data Engineers look for autonomy, the right tools, and a dependable path to production, and their decisions often hinge on impact, career growth, and environment.

Here's An Example Of What You Could Include:

We invest in the environment that helps engineers do their best work. Expect competitive compensation, remote-first flexibility, a learning budget, and comprehensive benefits - plus modern stack access and home-office support to keep you effective wherever you are.

- Competitive Compensation: Salary plus [bonus/equity/performance incentives].

- Work Your Way: Remote-first with flexible hours across time zones.

- Growth & Learning Budget: $1,500+ for courses, certifications, and conferences.

- Comprehensive Benefits: Healthcare, retirement, and insurance.

- Well-being & Tools: Home-office setup, wellness allowance, modern data stack.

- A Builder's Culture: Experimentation time, blameless post-mortems, clear career paths.

Note: If you can swing it, these are excellent add-ons to attract top Data Engineers.

- Serious hardware: High-spec laptop (fast CPU, ample RAM, NVMe SSD), dual/triple monitors, and ergonomic peripherals.

- Compute & storage budgets: Monthly credits for warehouses/lakes and pipelines (e.g., Snowflake/BigQuery/Redshift, Databricks/EMR), plus durable object storage and lifecycle policies.

- Modern data stack: Orchestration (Airflow/Prefect), transformation (dbt), schema registry, CDC tooling (Debezium/Fivetran), catalogs/lineage (DataHub/Amundsen), and observability (Great Expectations/Monte Carlo).

- Streaming & real-time: Managed Kafka/Kinesis/Pub/Sub with topic governance, consumer lag dashboards, and replay and schema versioning.

- CI/CD & IaC: GitHub/GitLab pipelines, Terraform modules, ephemeral environments, data contract checks, and automated tests on PRs.

- Security by default: VPC/peered networks or VDI, RBAC/IAM with least privilege, encryption at rest/in transit, secrets management, and audited access to PII.

- Cost & performance guardrails: Warehouse resource monitors, query profiling tools, auto-clustering/partitioning, and spend dashboards.

- Analytics & BI access: Clean, modeled datasets with self-serve Looker/Mode/Amplitude and proper SLAs/SLIs.

- Home office setup: Stipend for desk/chair, webcam/mic/lighting, plus annual refresh for upgrades.

- Quality of life: Noise-canceling headphones, high-speed internet reimbursement, and accessories on request.

How To Apply

Use this section to make the application process feel welcoming, clear, and straightforward. Keep the tone inclusive and encouraging, so candidates from diverse backgrounds feel confident applying.

Here's An Example Of What You Could Include:

Sound like the challenge you're looking for? Brilliant, we're excited to hear from you! Please send:

- Resume/CV and a brief note on why you're excited about the role

- 2-3 artifacts (e.g., a dbt model/PR, pipeline design doc, data quality playbook) with one-paragraph outcomes

What Happens Next:

- Applications are reviewed on a rolling basis until [deadline]

- Shortlisted candidates proceed to: [screening call → practical exercise or architecture walkthrough → panel]

Have any questions? Contact us at [email/contact form].

Your job description is your first sales pitch to data engineers. It needs to show why the role is exciting, the scale of the systems they'll help design, and how candidates can grow with your team - while being specific enough to filter for the level of expertise you're looking for.

- Start With a Compelling Intro: Hook candidates by highlighting your company's mission, the size and complexity of your data, and the impact of reliable pipelines.

- Highlight the "Why": Show how dependable data platforms improve decision-making, reduce costs, and accelerate innovation.

- Be Specific About Stack & Process: Outline key tooling (e.g., dbt, Kafka, Terraform) and say which tools are must-haves and which can be learned on the job.

- Show Growth Opportunities: Whether domain ownership, mentorship, or leadership - let them know what's possible.

- Balance Requirements With Flexibility: Focus on core engineering skills while keeping tool-based needs flexible.

- Describe the Team Environment: Rituals like design reviews and retros build learning and reliability.

- Sell the Culture and Benefits: Autonomy, budgets, and modern tools go a long way.

- Use Plain, Human Language: Avoid jargon. Speak like a real person to attract real people.

- Make it Candidate-Centered: Phrase responsibilities as exciting opportunities.

- Add a Call to Action: End with a warm invite and clear application steps.

A competitive job description needs a salary that candidates will see as fair. Here are the latest salary insights (which you should localize for region, role level, and cost of living).

- Industry-wide, the average U.S. salary for a Data Engineer is ~$142,000

- Entry Level: $95,000-$120,000

- Mid-Level: $125,000-$155,000

- Senior/Lead: $160,000-$200,000+ (often with equity/bonus)

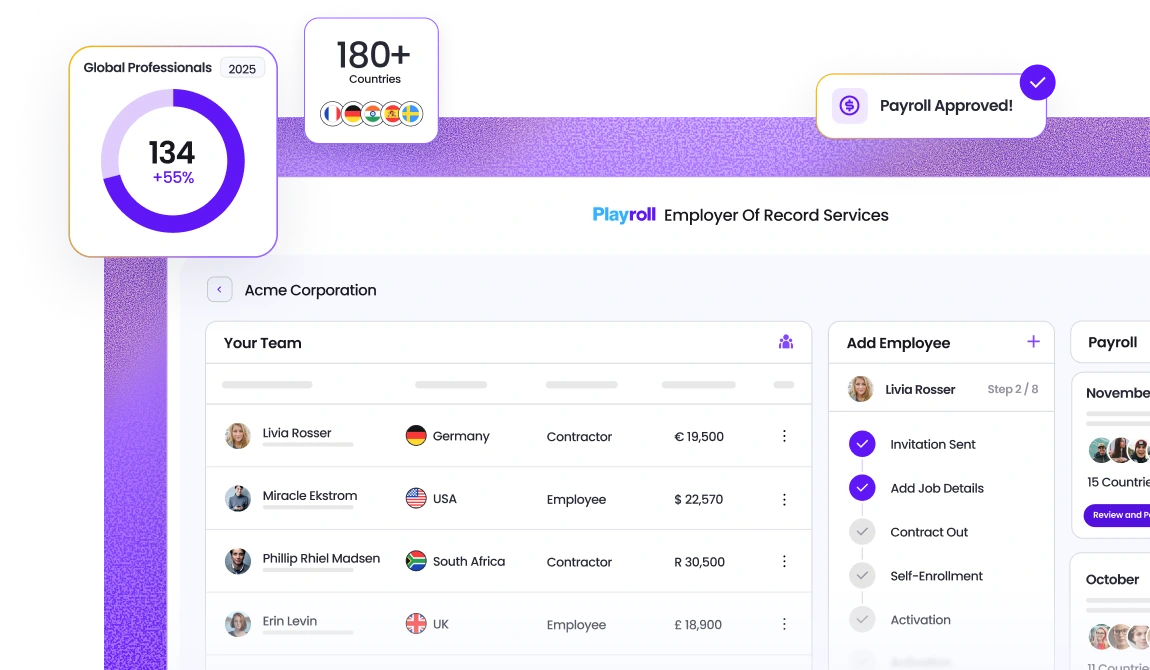

Hiring the right Remote Data Engineer is only the first step. The real challenge often comes afterward: navigating international contracts, compliance requirements, payroll, and tax laws that vary from country to country. For many companies, setting up legal entities across multiple regions isn't realistic. It's costly, slow, and distracts from the real priority: building great products.

At Playroll, we make it simple to hire anywhere in the world. From onboarding and payroll to benefits and labor law requirements, we take care of the heavy lifting so you can focus on scaling your team and driving innovation. It's a smarter, more affordable way to grow your team and bring in the data engineers who will shape your company's future. Book a demo to get started today.
